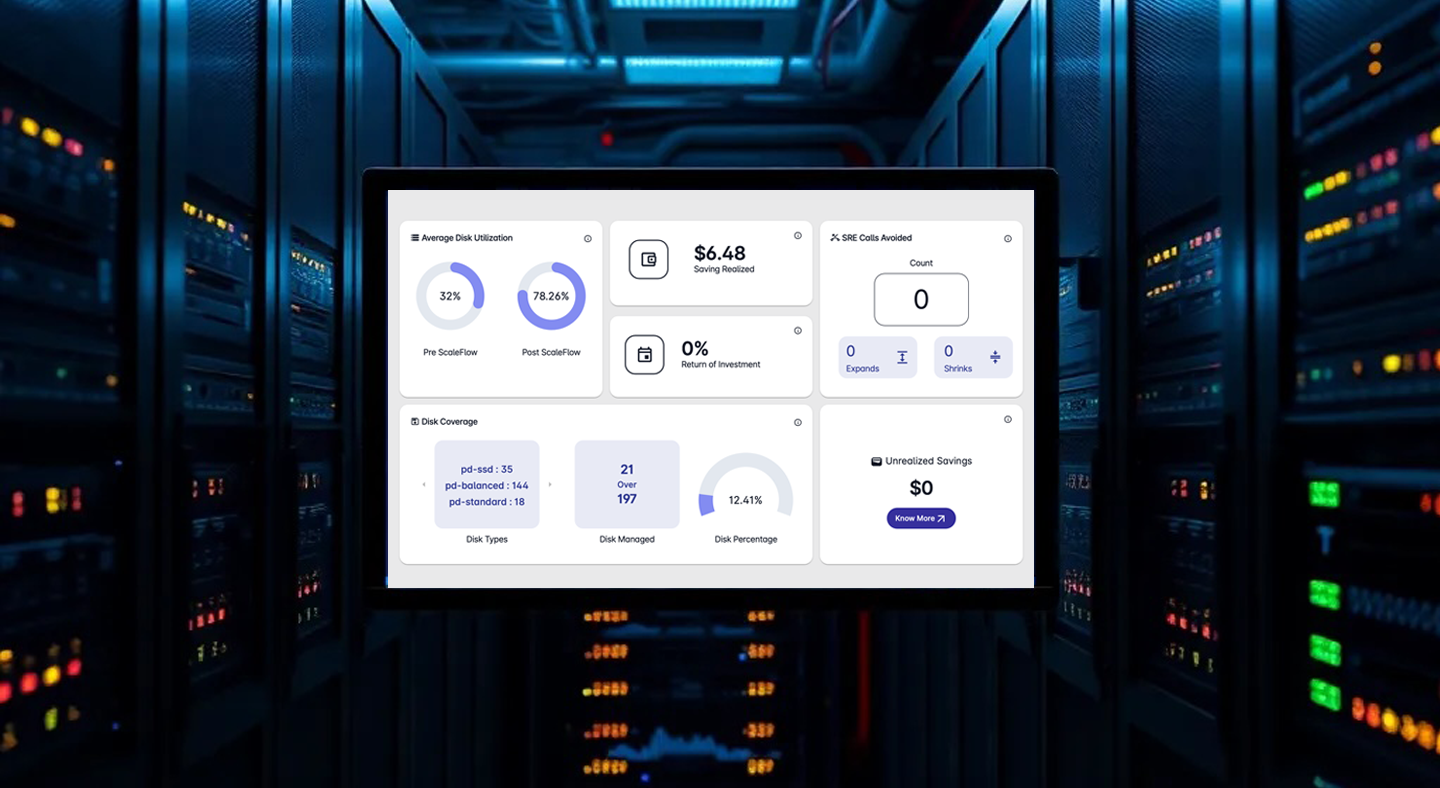

Thrilled to be part of India’s most prestigious gathering of cybersecurity and IT decision makers! We're here as an Exhibit Partner, and the energy at our booth is electric, from thought-provoking conversations to live demos of our cloud and security solutions. 🙌
